Connected Places, LLMs and slop

Friend of the channel Laurens (@laurenshof.online) writes, among other things, The ATmosphere Report on his blog Connected Places, covering goings-on in the atproto ecosystem, alongside regular news pieces on the Fediverse and Mastodon. He also writes less formal pieces on leaflet.pub, a pattern I've seen a number of people in the atproto ecosystem follow, maybe starting with Dan Abramov's side blog, underreacted, and this is the kind of use I'd love to see people put Weaver to. While it will be a great place for more formal writing over time, it's also very low friction to just write something and stick it up for people to see.

This time, Laurens did something a bit different, and I'd like to talk about it. He made a webpage with extensive atproto integration. You love to see it, and this sort of free-form integration is sort of the original dream of Web 2.0 and "the semantic web" finally made real, and very much the kind of thing the at:// protocol is supposed to enable, and does. However, Laurens isn't an engineer. He knows his way around enough to set up WordPress for his blog, but he self-describes as an analyst, a writer, and a journalist. He's not a programmer. And yet, over the course of a week or so, he just made his own Mastodon client to help him get a better overview of what's going on there without having to be extremely online, and then what became traverse.connectedplaces.online, a similar aggregator/curation tool for atproto links of interest, derived from Semble's record data. No, this article isn't just an excuse to show off the atproto record embed display I just added to Weaver, or at least it isn't just that.

The secret is, of course, Claude. Anthropic had an offer for $200 in API credits if you used them all via the new Claude Code web interface. Laurens took them up on that offer and, armed with Claude Opus 4.5, built tools that were interesting and useful to him, which he'd never have been able to build on his own, certainly not without a great deal of time and effort. That's not to say he just prompted Claude and was done, but obviously the work he did was of a different nature than if he'd coded it all himself.

Slop

Anything created primarily via LLM prompting often gets referred to as "LLM slop". This isn't necessarily unfair. Certainly, putting a pre-Elon tweet's worth of text into a ChatGPT interface and getting out a faux Ben Garrison comic (or a cute portrait of yourself and your partner in the Studio Ghibli style from a selfie) does not an artist make. That being said, there's quite a history of generative algorithmic art, and there's certainly AI art in the more modern sense (GAN/diffusion model prompted via text) which also qualifies.

In a lot of ways the distinguishing feature is vision and effort. The below image is the output of a custom trained image generation model, with careful curation of the dataset and the labels to be prompted to generate a specific set of styles, characters, and so on, for the creator's tabletop RPG campaigns.

Is @dame.is an engineer? The Greatest Thread in the History of Forums, Locked by a Moderator After 12,239 Pages of Heated Debate

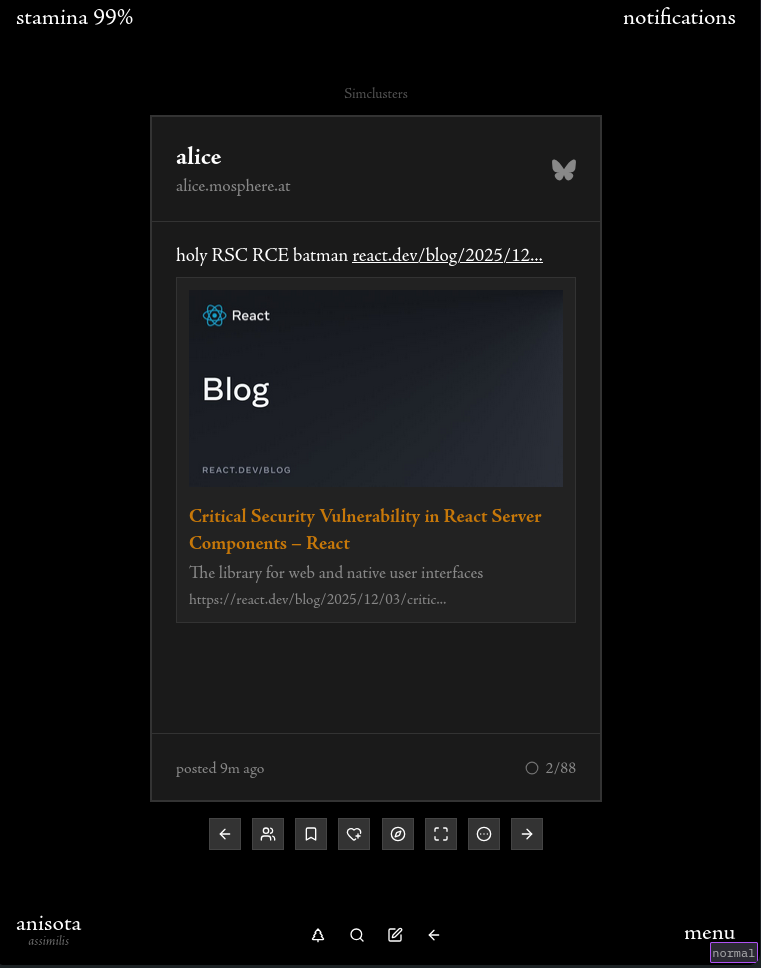

Anisota.net, a Bluesky client and quite a bit more created by someone using LLM assistance, who knew very little about software engineering when they made their first atproto project, isn't either, really. Dame has a highly specific vision for Anisota, as is immediately evident when you open it, and it's not one that Claude or any other LLM just does naturally. It's a combination of a chill, zen social media client, an art project, a rejection of basically all recent trends in UI design, and it's also a game, of sorts. Also moths. Lots of moths.

So let's come back to Laurens and what he created with LLM assistance. I'll level with you, I think it is good that Laurens and Dame can make software they couldn't make otherwise. LLMs, along with their many other direct and indirect societal effects, are democratizing software development in a way nothing since Apple's HyperCard really has. "Low-code" was a buzzword for a while, and mostly ended up with clunky, slow, inflexible half-apps that were totally tied into specific company ecosystems, because non-technical executives and managers wanted to be less dependent on their technical subordinates. And unfortunately there's a lot of that going around with AI as well. Execs and managers are generally far more into it than their workers are, and that's worrying from a labour rights perspective, as we're already in something of an era of managerial and executive backlash against their own workers, particularly in tech and academia, but it's also interesting. AI to some degree replaces technical problems with managerial and curatorial ones, and it does that in some amusing and perhaps disturbing ways (as anyone who's yelled at an LLM and gotten back a "harried subordinate trying not to piss off their manager" sort of response can attest). But I've watched Dame evolve into, if not a software engineer, at least a damn competent product engineer over the last year or so, almost entirely because the process of using LLMs to create web apps has both caused them to learn a lot about engineering they wouldn't have otherwise (they 100% can program now) and gotten them actually interested in it, not just as a means to an end. Their output would not be nearly so good and usable and interesting if they hadn't learned the ropes and how to think about making software that's good and usable.

Friction

Laurens's LLM-created tools obviously took much less time and effort and vision than Anisota, but he's also not really making software for an audience larger than himself. He's the only real intended user, and if they're an unmaintainable mess, that's his problem to deal with. It's a whole different kettle of fish than people who are some strange combination of delusional and thoughtless making large pull requests to open-source software projects (or really anything with a GitHub) that are entirely vibe-coded, and then getting angry when the maintainers of those projects are dismissive of "their" contributions. Anthropic themselves have talked about how Claude being pretty good at coding has resulted in all sorts of little internal tools getting created that never would have been otherwise. Nice dashboards, monitoring tools, all sorts of stuff that's simple enough that any coder worth their salt can do it in their sleep, but that's often needed by someone without those skills (who might not think to bother a dev about it out of respect for their time, or might get rejected if they had), or that's low enough priority or experimental enough that it wouldn't get done when there are actual bugs to fix and features to deliver. Certainly I have a lot more things in the category of "useful little scripts" kicking around than I did a year ago, and they're a lot nicer, too, entirely because Claude writes pretty good shell scripts. The overhead of asking the AI is low relative to writing what is often reasonably complex bash myself, and it lets me get back to whatever else I was wanting to do in the first place, just that little bit more efficiently and pleasantly.

Vibes

Vibe coding enthusiast friends of mine are predicting something of a renaissance in bespoke software made with LLM assistance, and what Laurens has made definitely is in line with that vision. However, said vibe coder friends also noted that they don't see nearly as much of this sort of thing as they expected, yet, given how easy it has gotten. I think one part of the explanation for that is lack of awareness and familiarity with the tools required. Most people who don't already have at least one foot in the world of tech aren't ready to open a terminal to run Claude Code, or download an "AI IDE" like Cursor. LLM tools are also kind of expensive, especially if you're getting the agent to do a lot of coding. That iterative development cycle burns tokens, and most people aren't gonna spend the $20-$200+ a month cost of entry unless they have reason to believe they're gonna get good value. But I think more than that, there's just a lot of learned helplessness. People are so used to computers being appliances, which they can't really improve on their own if they don't meet their needs, that the concept of causing new software that's useful for them to be created, by them or by an LLM, is just a lot to wrap their heads around. The at:// protocol and really any decentralized internet thing has much the same problem. People are so used to being locked in that they don't know what to do with freedom and autonomy and can't really think about it without a big mindset shift.

Unfortunately, what that's meant so far is that for every Laurens or Dame, there are seemingly hundreds of people who want to feel like genius engineers without putting in the legwork. The "I just need someone to help me make an app to take on Facebook, I've got a budget of $5000" people have found their perfect tool, and honestly I feel bad for the tool. Because the little guy is just so enthusiastic when it's working on cool stuff. And really I don't have a solution beyond hoping for, and maybe manifesting via persuasion, a world where people do understand their own limitations and respect their tools and the time and effort of others. For my part, I'm going to keep urging people to be nice to the entities, educating and empowering people to communicate and collaborate, and fighting the Mustafa Suleymans and Sam Altmans as well as those determined to turn out the lights on the future out of a misguided sense of justice. I'm an engineer and an artist, the two are of one piece in my soul, and I want the future to have a place for both in it.